The massive computers of the 1950s made technology inaccessible to most people. Then came the 1960s PDP-8 minicomputer, changing everything at a fraction of the cost. Schools and small labs could finally afford computing power. Microchips made devices smaller, while BASIC made programming accessible to beginners. The Unix operating system made sharing one machine among many users efficient.

These innovations solved the biggest technology problems of their time and built the foundation for devices we rely on today.

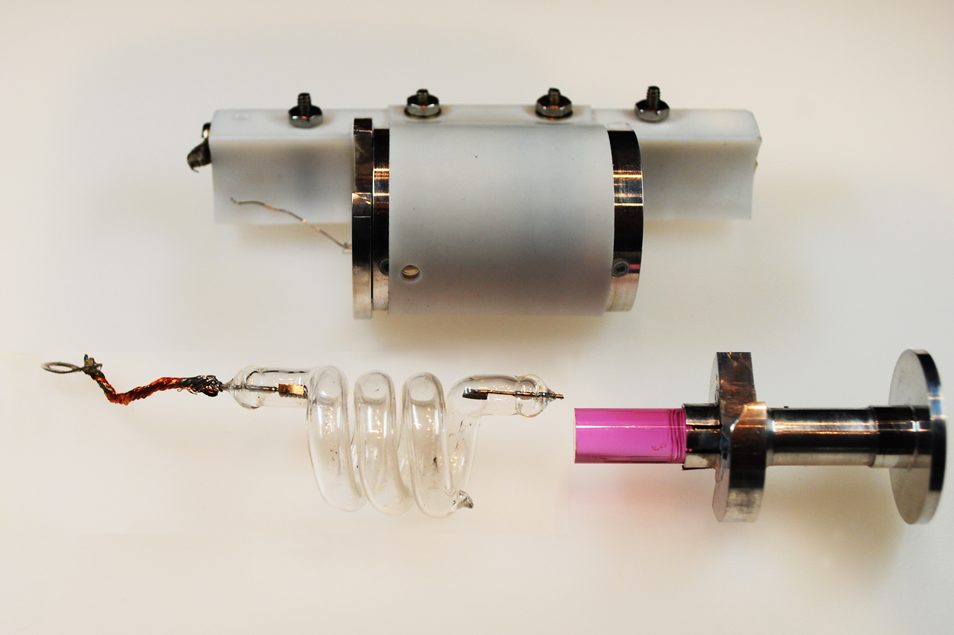

13. Ruby Laser

The ruby laser wasn’t just another invention—it was a physics revolution in a tube. The synthetic ruby crystal amplified light into something no one had seen before: a coherent, intense beam that made scientists’ jaws drop. Tech nerds went crazy for this thing. Why? Because it wasn’t just bright—it was precise, focused, and packed with potential.

Built around a synthetic ruby rod roughly 1 cm by 2 cm, mirrored at both ends and excited by a flashlamp, this laser produced a pure red beam that put Albert Einstein’s 1917 theory of stimulated emission to work with visible light. Early adopters saw past the glowing red beam to its future in everything from optical surgery to communications and manufacturing.

12. Unix Operating System

Unix hit the computing scene like a bomb. Before it, sharing a computer usually meant either waiting your turn or running a big, costly time-sharing system. Unix said “screw that” and delivered a compact multi-user system that let programmers share resources without tripping over each other. Its hierarchical file system wasn’t just smart. It was genius, making data management actually make sense. Look at your Mac or Linux machine today. That’s Unix DNA right there. Its principles didn’t just influence modern computing; they built it.

11. Nimrod Computer

Remember when gaming meant chess boards and card tables? The Nimrod computer showed up at the 1951 Festival of Britain playing Nim when most people thought computers were just fancy adding machines. This beast used electrical circuits to solve puzzles, blowing minds and showing that machines could think—at least in a basic way. While everyone else was figuring out how to make computers calculate faster, Nimrod was already playing games. Its impact went beyond entertainment, pushing both coding practices and circuit design forward. Early tech fans didn’t just see a machine—they saw the future.
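For the curious, here is a minimal Python sketch of the standard winning strategy for Nim, the game Nimrod played. It assumes normal play (whoever takes the last object wins) and is not a reconstruction of Nimrod’s circuits. The function names `nim_sum` and `best_move` are made up for this illustration.

```python
from functools import reduce
from operator import xor


def nim_sum(heaps):
    """XOR of all heap sizes; zero means the player to move is losing."""
    return reduce(xor, heaps, 0)


def best_move(heaps):
    """Return (heap_index, new_size) for a winning move, or None if there is none."""
    total = nim_sum(heaps)
    if total == 0:
        return None  # every possible move hands the opponent a winning position
    for i, size in enumerate(heaps):
        target = size ^ total
        if target < size:
            # Shrinking this heap to `target` makes the overall nim-sum zero.
            return i, target
    return None  # not reached when total != 0


if __name__ == "__main__":
    print(best_move([3, 4, 5]))  # (0, 1): reduce the first heap from 3 to 1
```

The trick is that a position with a nim-sum of zero is a losing one for whoever has to move, so the winning player always moves to such a position.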

10. PDP-8 Mini Computer

The PDP-8 minicomputer, launched in 1965, rewrote the rules. Before it, computers lived in climate-controlled rooms and cost far more than most houses. The PDP-8 was small enough to sit on a desk and, at around $18,500, cheap enough that normal labs and schools could actually buy one. This wasn’t just a smaller computer. It was a computing revolution that put processing power in the hands of regular scientists and educators. Without this critical step, personal computing might have taken decades longer to develop.

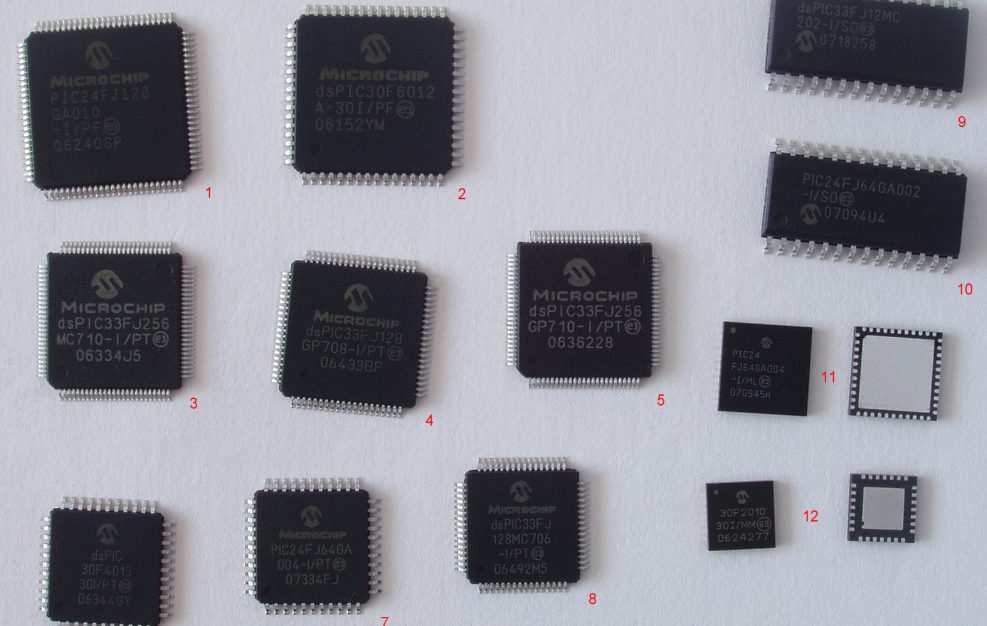

9. Microchips

Microchips changed everything. Period. Before integrated circuits, computers filled rooms with hot, power-hungry components. Microchips began packing those components onto a sliver of silicon smaller than your fingernail. Suddenly, computers could be faster, smaller, and use way less power. This wasn’t just a technical upgrade. It was the key that unlocked modern computing. Every device you own today, from your phone to your microwave, owes its existence to this breakthrough, invented in the late 1950s and commercialized in the 60s.

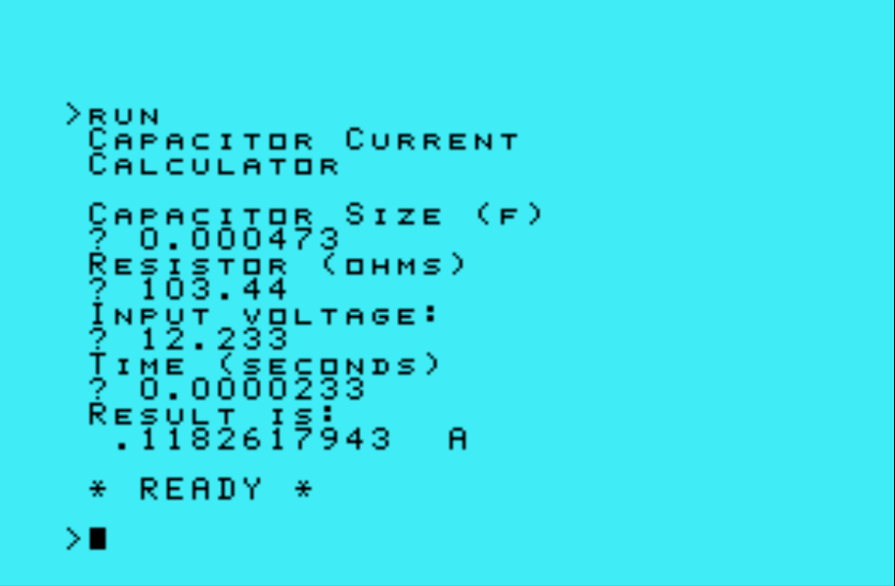

8. BASIC Code

BASIC code broke down walls. Before it, programming was largely the preserve of specialists working in machine code, assembly, or languages like FORTRAN that were built for scientists and engineers. BASIC (Beginner’s All-purpose Symbolic Instruction Code), created at Dartmouth in 1964, made coding accessible to anyone with a logical mind. Students who would have been locked out of computing could suddenly write programs themselves. This language didn’t just teach a generation to code. It democratized computing itself, setting the stage for the software explosion that followed.

7. Apollo Guidance Computer

Few people realize the Apollo missions weren’t just about planting flags on the Moon. They were about solving seemingly impossible computing problems. These missions needed guidance systems that couldn’t fail, in packages that couldn’t weigh much, using less computing power than your digital watch has today. The space program pushed microchips to their limits, forcing innovations that eventually filtered down to consumer tech. When Neil Armstrong took that small step, he was standing on the shoulders of computing giants.

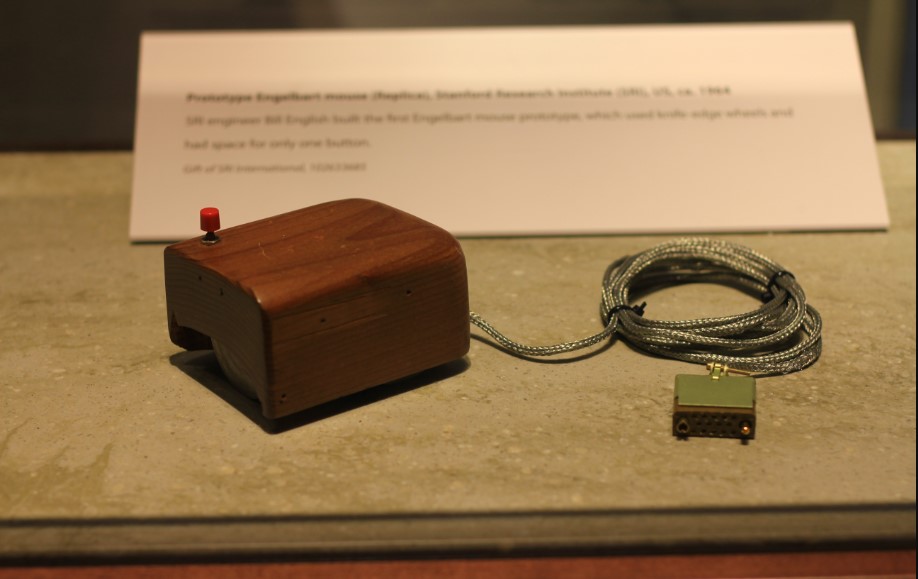

6. First Computer Mouse

Doug Engelbart’s computer mouse changed how humans talk to machines. Before it, people communicated with computers mostly through punch cards and keyboards. The mouse, a wooden, boxy thing back then, let users point directly at what they wanted. This wasn’t just convenient. It was revolutionary, making computers accessible to people who weren’t technical experts. That wooden box evolved into the sleek devices we use today, but the core concept remains unchanged: direct, intuitive control. It is just one of many amazing gadgets born in the 60s.

5. Arthur C. Clarke Sci-Fi Classics

Have you ever wondered who predicted satellite communications decades before they existed? Arthur C. Clarke didn’t just write science fiction—he wrote science prediction. Books like “2001: A Space Odyssey” weren’t just entertainment; they were roadmaps for future technology. Clarke understood both science and human nature, creating stories that felt not just possible but inevitable. His concepts—from communication satellites to AI—inspired real scientists and engineers to turn fiction into reality. Clarke didn’t just see the future—he helped create it by showing what was possible.

4. IBM 1311

The IBM 1311 disk drive broke data free from its cage. For the first time, disk storage became truly portable, a revolutionary 2 MB or so at a time. Users could lift a disk pack out of the drive, carry it away, and access their data on another machine. This wasn’t just convenient. It fundamentally changed how people thought about data. Information wasn’t tied to a specific machine anymore; it could move. That idea still shapes how we handle data today, from USB drives to cloud storage.

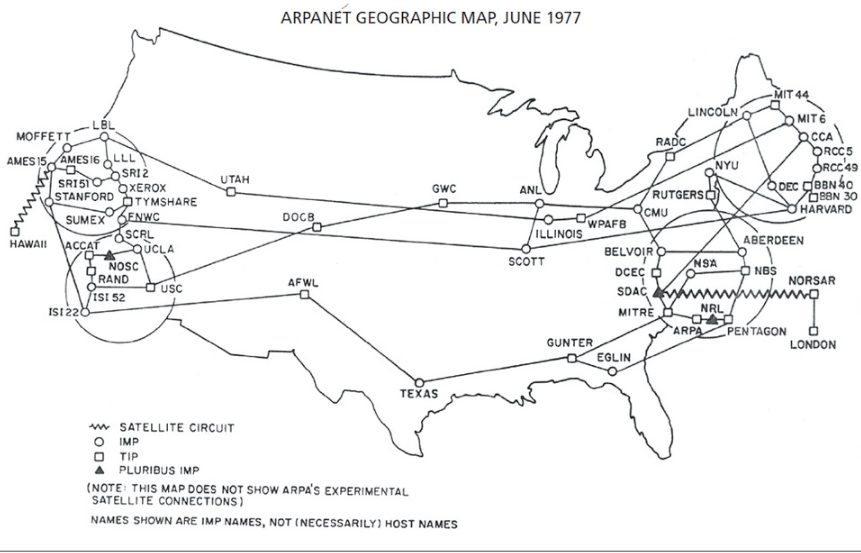

3. Origins of ARPANET

Created in 1969, ARPANET wasn’t just a network. It was the network. It connected computers across vast distances when most machines couldn’t even talk to others in the same room. This wasn’t just a technical achievement. It was a vision of a connected world. The project tackled communication problems we still grapple with today, laying groundwork for everything from email to streaming video. Without ARPANET, the internet as we know it wouldn’t exist.

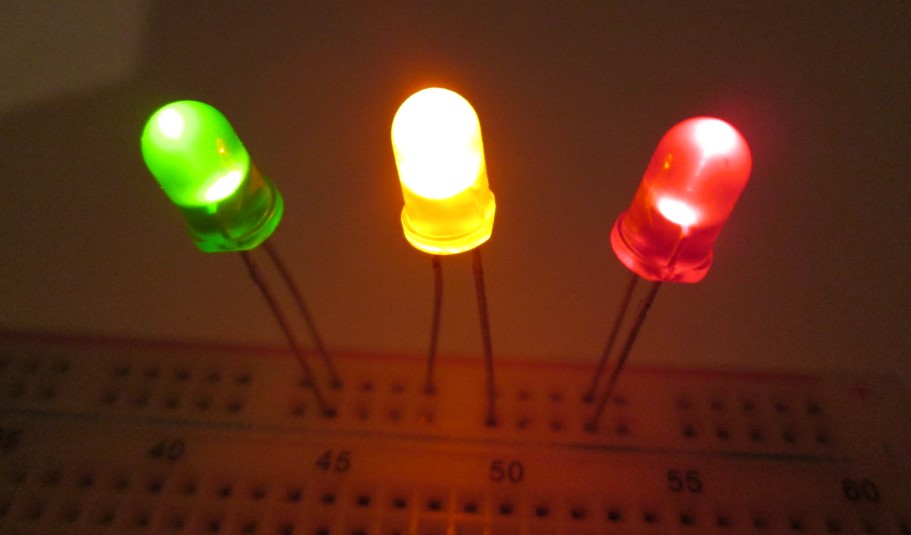

2. The First LEDs

LEDs started small—literally. These tiny light emitters initially just glowed red, serving as simple indicators on electronics. But their efficiency and compact size signaled a lighting revolution in the making. Early tech enthusiasts recognized their potential while mainstream consumers just saw blinking lights. LEDs didn’t just replace bulbs—they enabled entirely new kinds of displays and devices. From your TV screen to traffic lights, these small components changed how we see the world.

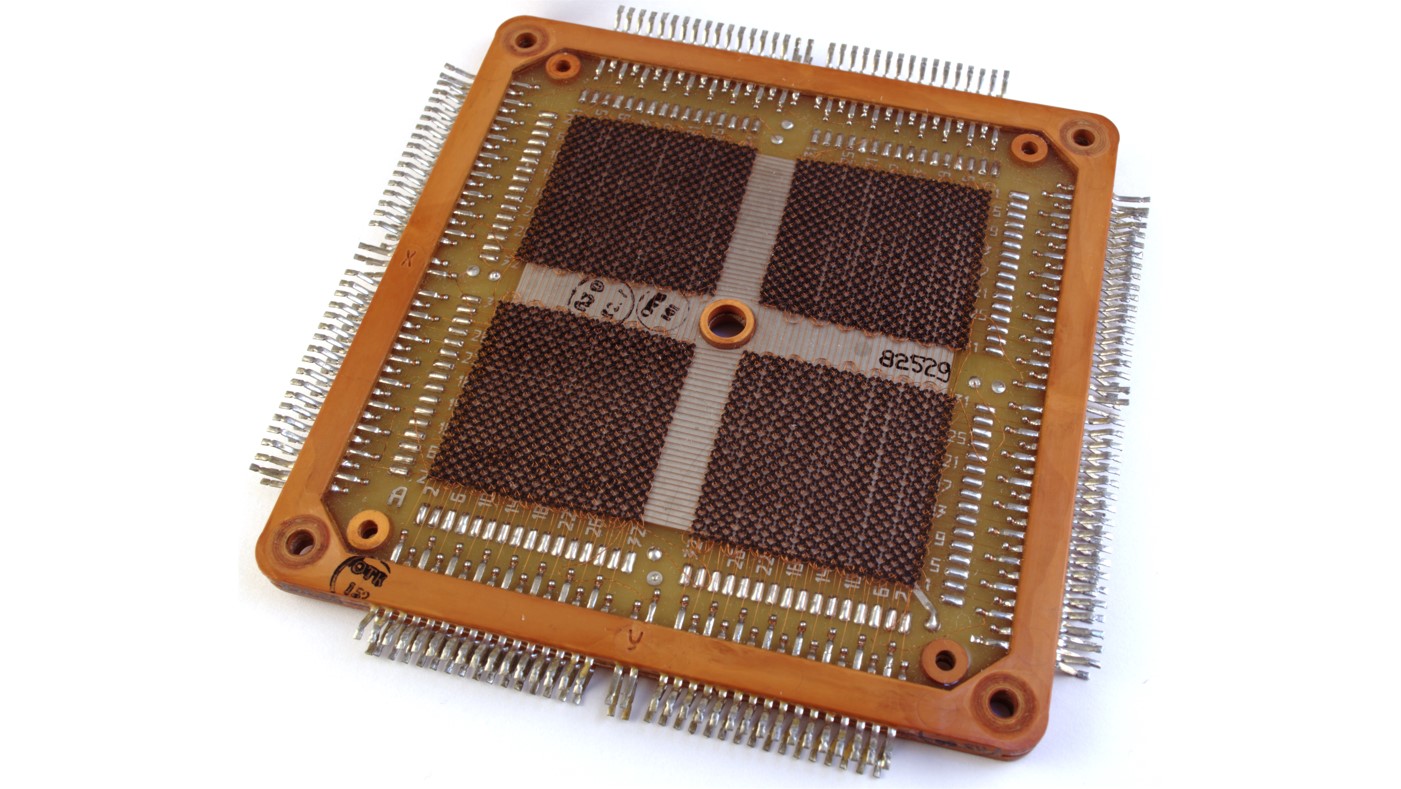

1. Magnetic Core Memory

Magnetic core memory solved computing’s amnesia problem. Using tiny ferrite rings to store bits of data, these arrays kept their contents even with the power off, when computers would otherwise forget everything. Each core held just one bit, and the cores were woven by hand into complex matrices, early computing’s equivalent of textile art. This technology wasn’t just storage. It was the breakthrough that made practical computing possible. Without the stability of core memory, the computer revolution might have stalled before it began.
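To make the “one bit per core, arranged in a matrix” idea concrete, here is a toy Python model of a single core plane. It is a deliberate simplification: the class name `CorePlane` and its methods are invented for this sketch, and the wiring and currents of real hardware are reduced to a row and column index. The one real-world quirk it does keep is that reading a core erases it, so the value has to be written straight back.

```python
class CorePlane:
    """Toy model of one plane of magnetic cores: one bit per core."""

    def __init__(self, rows, cols):
        # Every core starts out storing 0.
        self.cores = [[0] * cols for _ in range(rows)]

    def write(self, row, col, bit):
        # Picking one row wire and one column wire addresses exactly one core.
        self.cores[row][col] = 1 if bit else 0

    def read(self, row, col):
        # Reading forces the core to 0 and senses whether it flipped,
        # so the stored value is destroyed by the read itself...
        bit = self.cores[row][col]
        self.cores[row][col] = 0
        # ...and must be written back to preserve it.
        self.write(row, col, bit)
        return bit


if __name__ == "__main__":
    plane = CorePlane(4, 4)
    plane.write(2, 3, 1)
    print(plane.read(2, 3))  # 1
    print(plane.read(2, 3))  # still 1, thanks to the write-back
    print(plane.read(0, 0))  # 0, this core was never set
```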